Ever stared at a blank text file, terrified that one wrong keystroke could make your entire website disappear from Google? Manually creating a robots.txt file is a high-stakes task filled with stress. One tiny mistake can cause huge SEO headaches, costing you time and traffic. But there’s a better way to get it done.

This guide gives you actionable insights to generate a perfect robots.txt file quickly, giving you peace of mind and letting you get back to growing your business.

Why Creating a Robots.txt File by Hand is a Gamble

On the surface, writing a robots.txt file seems simple. But the manual process is surprisingly easy to get wrong, and the consequences can be severe. This is a classic pain point for anyone managing a website—a tedious, technical task where the risks are high.

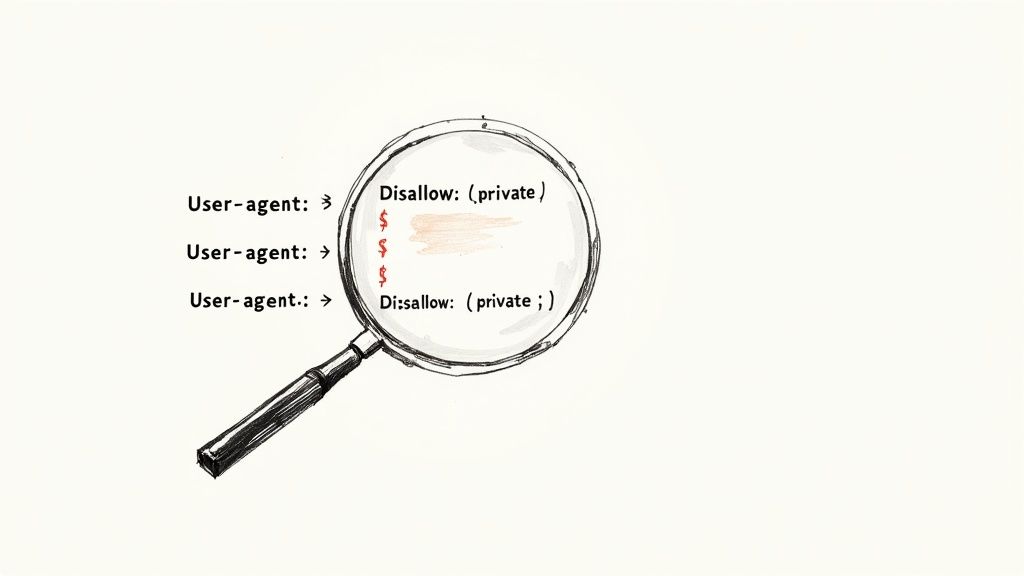

The biggest danger? A tiny syntax error. A missing slash, an extra asterisk, or a simple typo could accidentally tell search engines to ignore your whole site. One minute you’re ranking, the next you’ve vanished from the search results. This isn’t just a technical hiccup; it’s a direct blow to your traffic, leads, and sales.

The Real Price of a Small Mistake

The mental gymnastics required to get every line perfect is draining. You’re stuck obsessing over directives and syntax when you should be focused on what really matters—creating great content and growing your business. It’s like trying to organize a mountain of digital files by hand; the constant worry and wasted time prevent you from being productive.

Many of these mistakes happen because the rules can be confusing. For instance, there are common mistakes like misusing the robots.txt noindex directive that can have the exact opposite effect of what you intended.

The solution is automation. In Switzerland, automation is already central to how businesses operate. In fact, 52% of Swiss organisations are already using AI to automate tasks, a rate that outpaces both European and global averages. It’s a clear sign that smart automation is the way forward for productivity.

Trying to manage a robots.txt file by hand is like directing traffic at a busy intersection with no traffic lights. It’s inefficient, stressful, and one wrong move can bring everything to a grinding halt.

Ultimately, doing it yourself means trading your peace of mind for a constant, nagging worry. Automating the process isn’t just about saving a few minutes; it’s about eliminating risk so you can focus on what you do best.

Choosing the Right Robots Txt Generator

With so many tools out there to generate robots txt files, it’s easy to get lost. The trick is to find one that fits your situation perfectly, saving you time and headaches. Your choice comes down to two things: how complex your website is and how comfortable you are with technical details.

If you’re running a straightforward blog or a simple portfolio, a basic online generator is a perfect, time-saving solution. These are usually simple forms where you tick boxes and pick rules. They’re built to be user-friendly, giving you confidence that you won’t make a syntax mistake.

On the other hand, if you’re managing a massive e-commerce store with thousands of product pages, you’ll need something with a bit more muscle. For sites like that, you need specific rules for different search engines, directives to keep sensitive customer areas private, and a smart way to manage your crawl budget.

Finding the Best Fit for Your Website

To cut through the noise, just focus on what you actually need. A small business owner might find that a WordPress plugin is the perfect solution. Being able to make quick changes directly from the website’s dashboard, without fiddling with FTP, is a huge productivity boost.

For those juggling multiple sites or working on a larger scale, a more robust platform is likely the better choice. A developer, for example, might look for dedicated developer tools for Mac that can help automate the whole process. You can even find highly specialised tools like an LLM Txt Generator that use AI for more advanced control over crawlers.

The goal isn’t to find the most feature-packed generator; it’s to find the one that makes your life easiest. A tool that feels intuitive to you is one you’ll actually use, ensuring your site stays correctly configured without the stress.

To help you see the options more clearly, here’s a quick breakdown of the different types of generators you’ll come across.

Comparison Of Robots Txt Generator Types

This table compares the most common tool types to help you decide which is right for your website and technical skill level.

| Generator Type | Best For | Key Advantage | Potential Drawback |

|---|---|---|---|

| Simple Web Forms | Blogs, small sites, and beginners. | Extremely easy to use with no technical knowledge required. | Limited customisation for complex rule sets. |

| CMS Plugins | WordPress, Shopify, or Joomla sites. | Conveniently integrated directly into your site’s backend. | Can add slight overhead to your website’s performance. |

| SEO Suite Tools | Agencies and large, complex websites. | Part of a larger SEO toolkit with advanced validation features. | Often requires a paid subscription to a larger platform. |

| AI-Powered Platforms | E-commerce, large-scale publishers. | Creates dynamic, optimised rules based on site analysis. | May have a steeper learning curve and higher cost. |

Ultimately, whether you pick a simple online form or an advanced AI platform, the right tool is the one that gives you control without adding complexity to your workflow.

Generating Your First File: A Real-World Example

Alright, let’s turn theory into practice. We’ll walk through a common scenario to show just how fast and easy it is to create a robots.txt file with a generator.

The Pain Point: You run an online shop and need to block Google from indexing sensitive areas like customer accounts, the checkout funnel, and messy internal search results. Writing these rules by hand is risky.

The AI-Powered Solution: A generator gets it done right in a fraction of the time, giving you complete peace of mind.

Here’s a practical example of the rules you’d generate:

First, you’d set a rule for all crawlers using the User-agent: * directive. Think of this as your universal instruction for any bot that comes knocking.

Next, you’ll specify exactly which parts of the site to block. For our e-commerce store, the rules would look something like this:

Disallow: /account/: This keeps customer login and profile pages private and out of the search results.Disallow: /checkout/: No need for search engines to crawl the multi-step purchasing process.Disallow: /search/: This prevents the often chaotic and duplicate-content-rich internal search results from being indexed.

Finally, you’ll want to point crawlers to your most important pages. Just add a sitemap link, like Sitemap: https://yourstore.com/sitemap.xml. And that’s it. In just minutes, you’ve created a clean, effective robots.txt file without the stress.

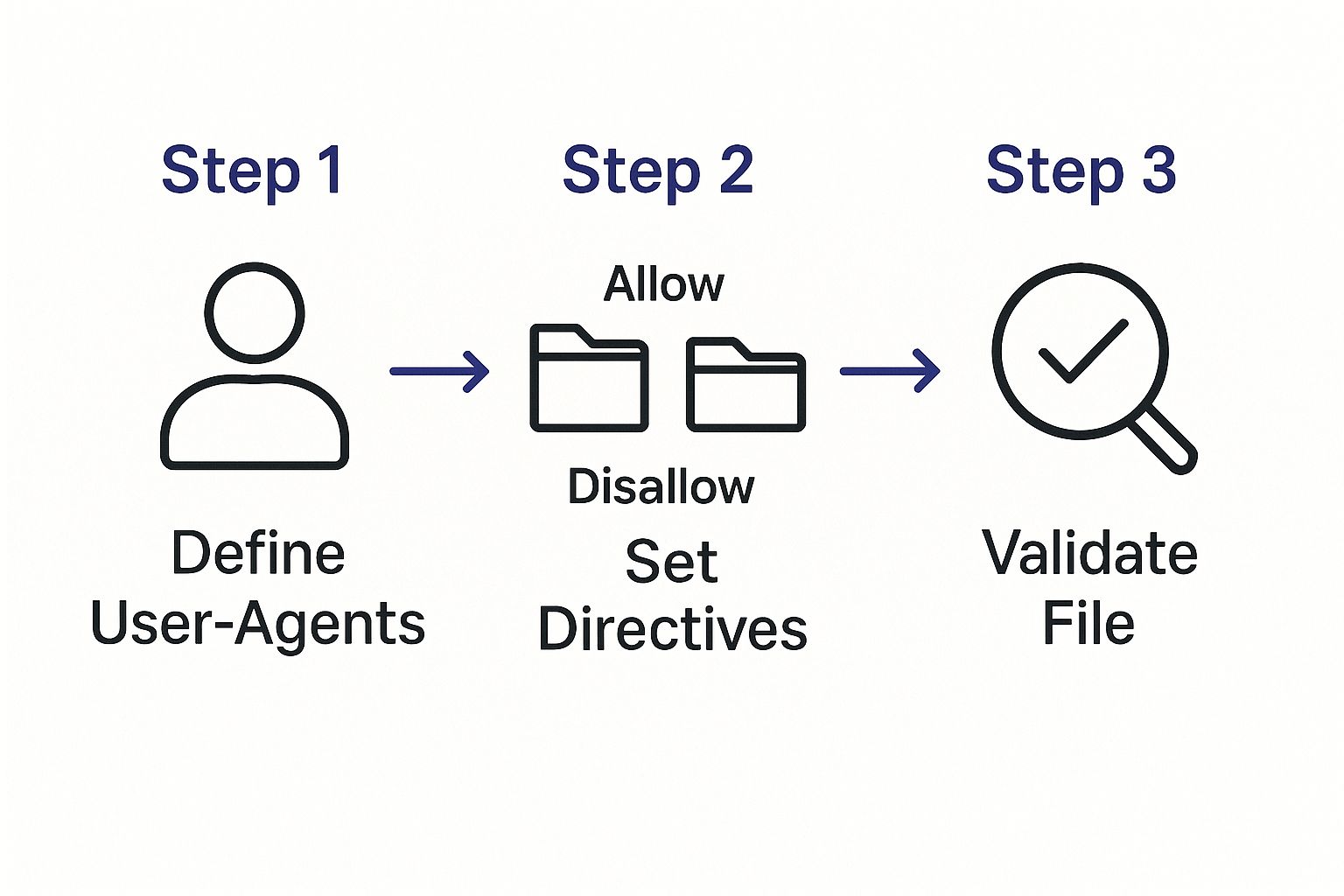

Understanding the Process Visually

If you’re a visual person, this simple graphic breaks down the entire workflow into three clear stages, from defining the rules to validating the final file.

As you can see, creating a robots.txt file is a structured process, not just guesswork. It’s about following a clear path, which gives you real peace of mind. This kind of automation is becoming the norm, especially in the Swiss technology market. With the local robotics market projected to hit US$895.69 million by 2025 and AI investments on the rise, automating technical SEO tasks is just smart business. You can find more data on the Swiss robotics and AI landscape on Statista.com.

Don’t Forget to Validate Your New File

Once you’ve generated the code, there’s one last crucial step: validation. Think of it as a final safety check to make sure your rules work exactly as you expect before you push the file live.

For developers and webmasters, having a quick way to validate is a huge timesaver. Speaking of efficiency, if you’re looking for other ways to speed up your workflow, our guide on how to install Homebrew on Mac for a productivity boost is a great place to start.

The “Allowed” status gives you the green light, confirming your key pages are still crawlable while the new rules are correctly blocking the areas you specified. No more worrying about accidentally disallowing your entire site!

Key Takeaway: Combining a generator with a validator transforms a high-stakes technical task into a safe, predictable, and efficient process. It gives you confident control over crawler access without the fear of making a costly mistake.

How to Test and Deploy Your New File Safely

Alright, you’ve drafted your robots.txt file. Before you rush to upload it, hold on. A single misplaced character in that file can make your entire site invisible to search engines. It happens more often than you’d think.

This is why a quick check before deploying is non-negotiable. It’s the difference between controlling search bots and accidentally telling Google to ignore you completely. Think of it as proofreading a critical email one last time before hitting send for ultimate peace of mind.

Validating Your New Rules

The best way to check your work is to run it through a proper validator. I always recommend starting with Google’s own robots.txt Tester , which you can find inside Google Search Console. It’s free, it’s accurate, and it tells you exactly how Google sees your rules.

Using a tool like this gives you a crucial safety net. It helps you:

- Catch Syntax Errors: It’ll instantly flag any typos or formatting mistakes that would otherwise break the file.

- Test Specific URLs: Wondering if your new rule blocks the blog but allows the product pages? Just pop in a URL and see for yourself.

- Confirm Crawler Access: This is the big one. The tool simulates how Googlebot interprets your directives, removing all the guesswork.

Once the validator gives you the all-clear, you know the file is technically sound and ready for action.

This final check is the difference between hoping your file works and knowing it works. It’s a simple step that protects all your hard-earned SEO.

Uploading Your File Correctly

With a validated file in hand, it’s time to get it live. This part is simple but very specific: the robots.txt file must go in the root directory of your website.

This means it needs to be accessible at a URL like https://www.yoursite.com/robots.txt. It absolutely cannot be in a subfolder like /blog/ or /assets/.

You can usually upload the file through your web host’s control panel (cPanel, Plesk, etc.) or with an FTP client like FileZilla. Once it’s uploaded, do one final check: type the URL into your browser. If you can see the text file you just made, you’re all set. Job done.

Alright, you’ve got a basic robots.txt file set up. Now, let’s turn it into a powerhouse for your SEO by optimising your crawl budget.

Think of it this way: search engines only have a certain amount of time and resources to crawl your site. You want to make sure they spend that precious time on your most important pages, not getting stuck on the stuff that doesn’t matter for ranking. By being strategic, you can guide crawlers straight to your best content.

Getting Granular with Crawler Access

One of the biggest culprits for wasting crawl budget, especially on e-commerce sites, is faceted navigation. You know, those filters for size, price, or colour. Every time a user clicks a new filter combination, it often generates a new URL. The content is basically the same, just sorted differently, which is a huge waste of a crawler’s time.

We can fix this by blocking URLs that contain specific parameters. For instance:

Disallow: /*?colour=tells bots to ignore any URL that has the “colour” filter applied.Disallow: /*?price-range=stops them from crawling every single price variation you offer.

The same principle works perfectly for your internal site search results. Those pages are useful for users, but they hold virtually no SEO value. A simple line like Disallow: /search/ is all it takes to keep crawlers away from them and focused on your actual content.

A well-tuned

robots.txtfile does more than just block pages. It essentially creates an express lane for search engines to find, crawl, and ultimately rank the content you care about most. It’s a tiny file with a massive impact on your site’s efficiency.

Handling Specific Bots and User Agents

Sometimes, you don’t want to block all bots—just specific ones. This has become particularly relevant as many site owners now want to generate robots txt rules to stop AI data scrapers from hoovering up their content to train large language models.

You can target these bots directly by specifying their user agent. For example:

User-agent: GPTBotDisallow: /

These kinds of advanced moves give you precise control. This is becoming increasingly important in Switzerland, as new local regulations and AI models emerge. The government is working on rules that align with the EU’s AI Act, which will shape how automated tools can interact with websites and content. You can get a sense of what’s new for AI in Switzerland for 2025 on swissinfo.ch .

Common Questions About robots.txt

Let’s tackle some of the most common questions that pop up when you’re working with robots.txt files. Getting these right will save you a lot of headaches down the road.

Is Blocking a URL the Same as Using ’noindex'?

This is probably the biggest point of confusion. Think of it this way: blocking a page in robots.txt is like putting a “do not enter” sign on a door. It tells crawlers not to visit the page.

However, if other websites link to that blocked page, Google can still see those links and might decide to index the URL anyway, even without crawling its content. To be absolutely certain a page stays out of search results, you need to use a ’noindex’ meta tag directly on the page itself.

Can I Stop Specific Bad Bots From Scraping My Site?

You absolutely can. While robots.txt won’t stop a truly determined scraper, you can disallow user agents that are known for being overly aggressive or just plain spammy. This can be a good way to protect your server from being overloaded and your content from being scraped.

A key thing to remember is that

robots.txtworks on the honour system. Legitimate bots from Google and Bing will follow your rules, but malicious bots will ignore them entirely. It’s a guideline, not a fortress.

How Often Should I Check My robots.txt File?

It’s not a “set it and forget it” file. A good rule of thumb is to give it a quick review every quarter. You should also make a point to check it anytime you make major changes to your site, like adding a new blog section or changing your URL structure.

Keeping your robots.txt up-to-date ensures it’s always working for your SEO strategy, not against it. For more tips on keeping your SEO in top shape, check out the articles on the Fileo blog

.

Ready to finally organise your digital life without the manual work? Try Fileo and let AI handle the filing for you. Get started with Fileo .